The United States is entering the home stretch of the 2024 presidential election, arguably one of the most important elections in its history. In this crucial moment, with a predicted close margin and the rapid growth of AI, the potential influence of AI on this election is weighing heavily on the minds of many.

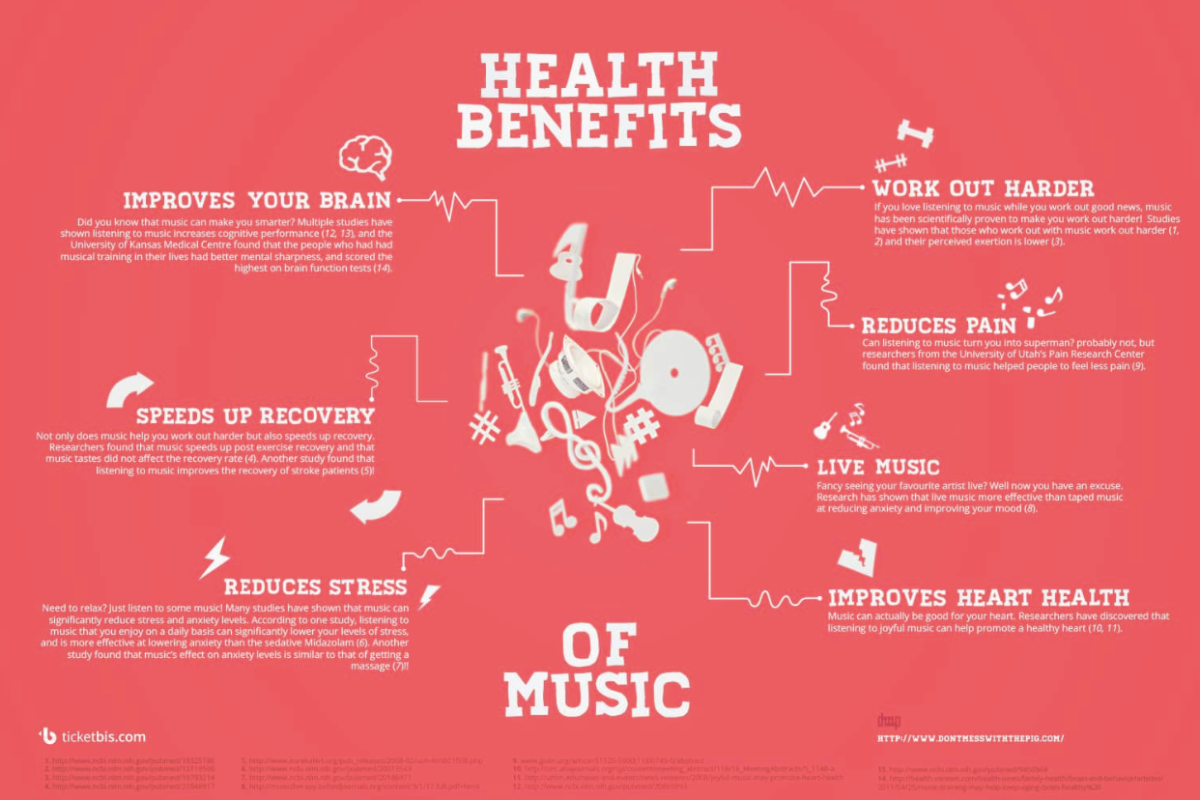

Although AI provides unique opportunities to improve electoral efficiency and voter engagement, it can also be misused to manipulate the election outcome.

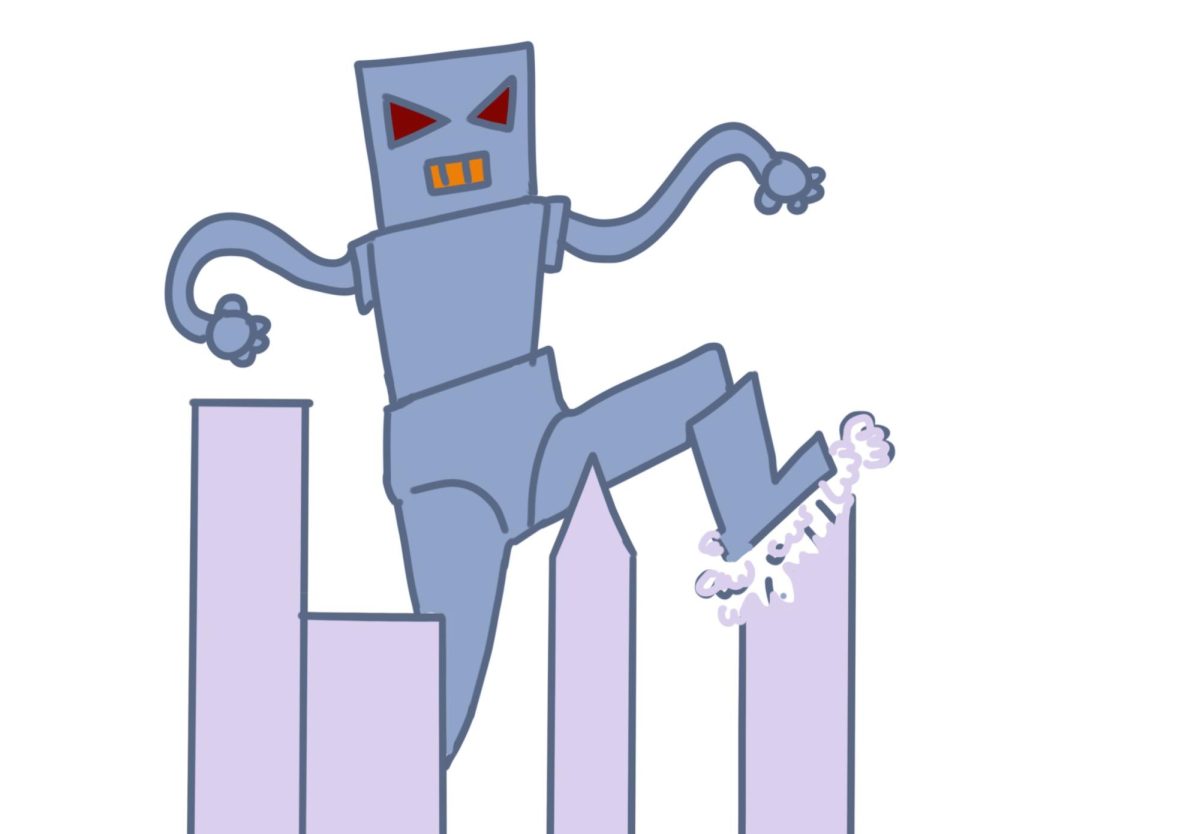

Artificial intelligence can be used to influence voters in many ways, including by exploiting personal data to shape their opinions. AI’s ability to launch cyber attacks, create deepfakes, and spread misinformation can undermine democracy, weaken the integrity of political discourse, and gradually erode public trust.

For example, Donald Trump circulated on the platform Truth Social an AI-generated advertisement of Taylor Swift endorsing his campaign, along with AI-generated photos of women wearing T-shirts saying “Swifties for Trump.”

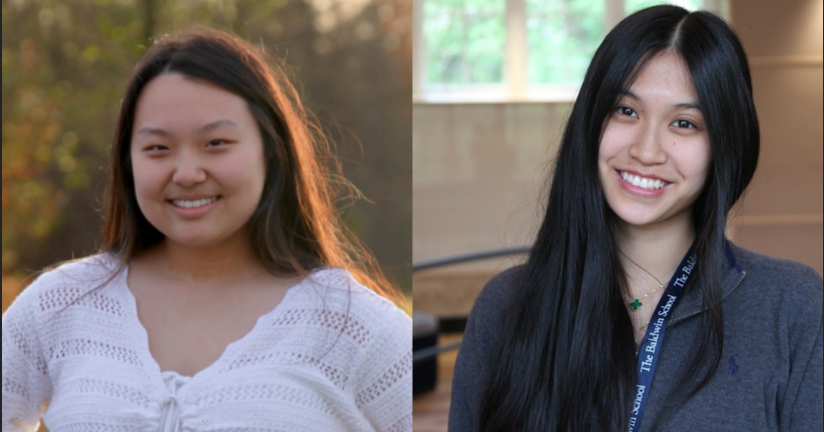

Emily Sidlow ’25, a registered voter, said the authenticity and virality of this post worried her.

As artificial intelligence grows exponentially, detection software and regulatory policies cannot keep up. This has led, and continues to lead, to more advanced deceptive AI-created content.

The exploitation of AI in elections has already occurred in countries worldwide. Just before Slovakia’s 2023 parliamentary election, a fake audio recording of a leading candidate discussing how to rig the election was posted on Facebook and went viral. His party ended up losing the election.

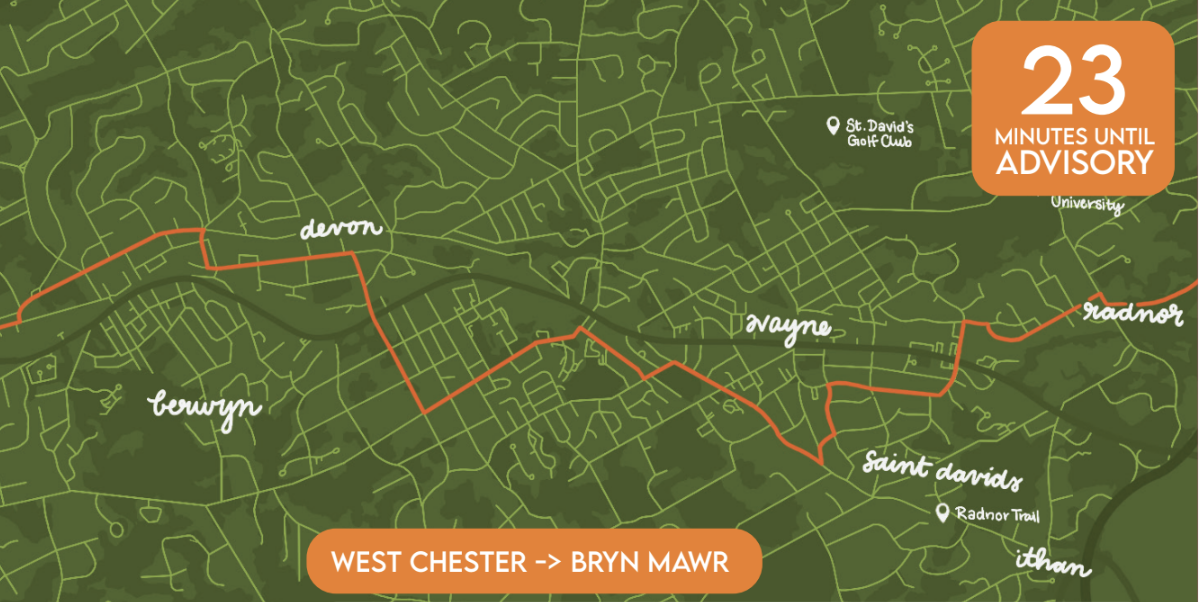

Before the New Hampshire primary this year, highly realistic AI-generated robocalls imitating Joe Biden’s voice were placed to thousands of New Hampshire residents, advising them against voting in the primary election. Eventually, the culprit behind the robocalls was fined six million dollars and faced more than two dozen criminal charges.

Hafsa Kanchwala ’25 will be eligible to vote in the upcoming election, and she said she worries about AI’s realism and broad reach.

“I honestly do not believe I would be able to [identify the AI content] in all instances, as AI has allowed people to have fake identities which makes identifying it very difficult,” she said.

Sidlow said she sometimes struggles to identify real versus AI content.

“It depends on what form of AI is being used,” she said. “Sometimes AI-generated images are easily identifiable and other times they are very deceptive.”

Sidlow was presented with a selection of nine political images of candidates Donald Trump and Kamala Harris. Some were AI-generated, and others were real. The fake photos included Trump being dragged by the police and Harris wearing a red hat with a hammer and sickle.

Sidlow correctly identified eight of the nine. Though AI may not yet be advanced enough to trick young voters like Sidlow, the technology is still at the beginning of its development.

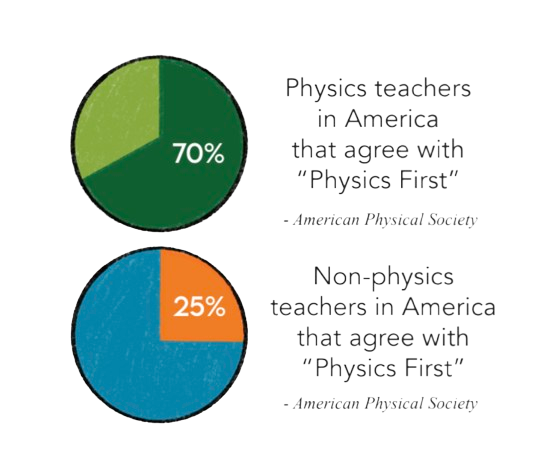

A study conducted by Nexcess showed that 18- to 24-year-olds could detect images generated by artificial intelligence 61% of the time, but 45- to 54-year-olds could do so only 51% of the time.

It is important to be cautious when taking in content about this election. We must work to combat these challenges by publishing corrective articles as quickly as possible, strengthening online safeguards, and implementing legislation that prohibits this type of misleading political advertising.